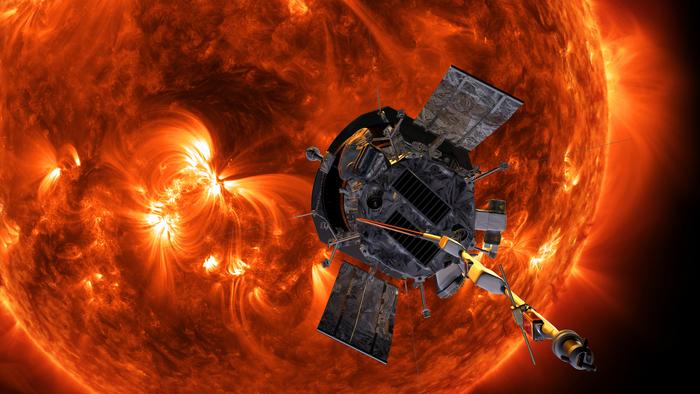

NASA’s James Webb Space Telescope is reshaping our understanding of how the universe evolved over billions of years. Among its significant findings, the telescope has now helped identify a crucial factor in the evolution of the early universe. This article explores those findings and what they imply for our understanding of cosmic history.

Understanding the Transformed Early Universe: The early universe looked vastly different from the one we see today. Its young galaxies were already home to bright, shining stars, but the gas that filled the universe has since undergone a profound transformation. Astronomers explain that this gas was once far more opaque, blocking energetic starlight, so an observer at that time would have had a much murkier view. Over the course of billions of years, something evidently changed.

Stars’ Heat Driving Evolution: New data gathered from the James Webb Telescope suggests that the early universe’s transformation into its current state was driven primarily by the stars within those early galaxies. Research conducted by Simon Lilly’s team at ETH Zürich in Switzerland focuses on this period, known as the Era of Reionization, a time of remarkable change. Energetic light from young, growing stars heated and ionized the surrounding gas, leaving the transparent gas that prevails today. Scientists have long sought an explanation for this transition, which made distant galaxies far easier to see.

Webb Telescope’s Insights: The recent data collected by the James Webb Telescope provides valuable insights into the universe’s evolution. As stars heated and ionized the surrounding gas, the early universe changed significantly. By the end of the reionization period, the gas had gone from largely opaque to transparent. This crucial period of evolution likely occurred more than 13 billion years ago, as described in a NASA report.

The Role of Massive Black Holes: To make this discovery, researchers focused on a quasar hosting one of the most massive black holes known in the early universe, estimated at 10 billion times the mass of the Sun. The quasar’s intense light acts as a backlight, illuminating the gas between it and the telescope. While this black hole still holds mysteries of its own, it has helped astronomers understand what drove the early universe’s evolution.

Thanks to the James Webb Space Telescope, astronomers have gained valuable insights into the early universe’s evolution. The telescope’s data suggests that the light and heat emitted by stars in early galaxies played a significant role in ionizing the gas around them, leading to a clearer and more observable universe. These findings have far-reaching implications for our understanding of cosmic history and provide a stepping stone toward unraveling the mysteries of the universe’s past.

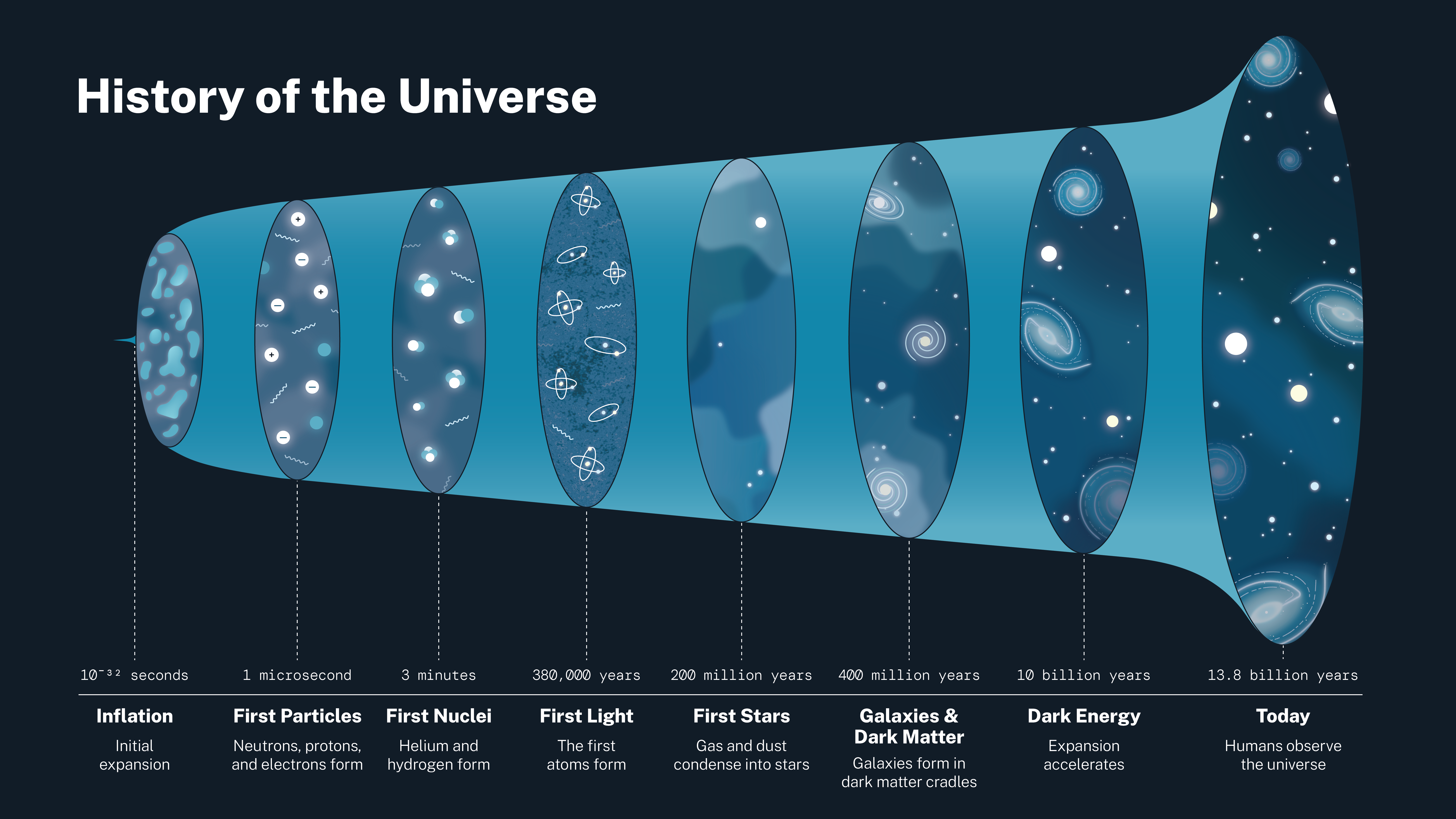

- What are the stages of the early universe?

The stages of the early universe refer to the different periods of its evolution. These stages can be broadly categorized as follows:

- Inflationary Epoch: A brief phase of rapid expansion immediately after the Big Bang.

- Electroweak Epoch: The electromagnetic and weak forces remain unified until electroweak symmetry breaks, separating them.

- Quark Epoch: The universe is filled with a quark-gluon plasma, a hot soup of quarks and gluons.

- Particle Era: Quarks combine into composite particles such as protons and neutrons, along with their antiparticles.

- Nucleosynthesis: Protons and neutrons fuse into light atomic nuclei, chiefly helium and deuterium.

- Photon Epoch: The universe is dominated by energetic photons.

- Matter Era: Particles, such as electrons and protons, combine to form neutral atoms.

- Galaxy Formation Era: Gravity causes matter to cluster, forming galaxies and other large structures.

- What are the 8 eras of the early universe?

The early universe can be divided into eight distinct eras based on its evolution:

- Planck Era: The earliest era, characterized by extreme temperatures and energies.

- Grand Unification Era: Gravity has separated from the other forces, while the strong, weak, and electromagnetic forces remain unified.

- Inflationary Era: Rapid expansion of space, which would account for the universe’s observed flatness and large-scale uniformity.

- Electroweak Era: Electromagnetic and weak forces become distinct.

- Quark Era: Quarks and gluons dominate the universe.

- Hadron Era: Quarks combine to form protons, neutrons, and other particles.

- Lepton Era: Leptons, such as electrons and neutrinos, dominate the universe.

- Photon Era: Photons become the primary constituent of the universe.

- What happened first in the early universe?

In the early universe, the first significant event was the Big Bang, which marked the beginning of spacetime and the expansion of the cosmos. During the initial moments, the universe underwent a period of rapid inflation, followed by the formation of fundamental particles, including quarks and gluons. As the universe cooled down, protons, neutrons, and other particles emerged, eventually leading to the formation of light atomic nuclei. Over time, as the universe expanded and cooled further, matter started to cluster, giving rise to the formation of galaxies, stars, and planets.

- What is the early history of the universe?

The early history of the universe refers to the period immediately after the Big Bang and encompasses its various stages and evolutionary milestones. It begins with the inflationary epoch, when the universe expanded rapidly, followed by the emergence of elementary particles during the electroweak and quark epochs. As the universe cooled, nucleosynthesis occurred, producing light atomic nuclei. The universe then entered the photon epoch, dominated by energetic photons. The matter era began as particles combined to form neutral atoms, leading to the formation of galaxies and large-scale structures. This early history laid the foundation for the subsequent evolution of the universe as we know it.