Federal prosecutors in Brooklyn have charged two defendants, Eliahou Paldiel and Carlos Arturo Suarez Palacios, with wire fraud conspiracy and money laundering conspiracy. The charges stem from an alleged wide-ranging scheme to hack rideshare apps and exploit both riders and companies. The defendants are accused of selling hacked smartphones and fraudulent applications that let drivers manipulate the Uber app to their advantage. If convicted, Paldiel and Palacios could face up to 20 years in prison for each of the two counts. Both have pleaded not guilty and were released on $210,000 bonds.

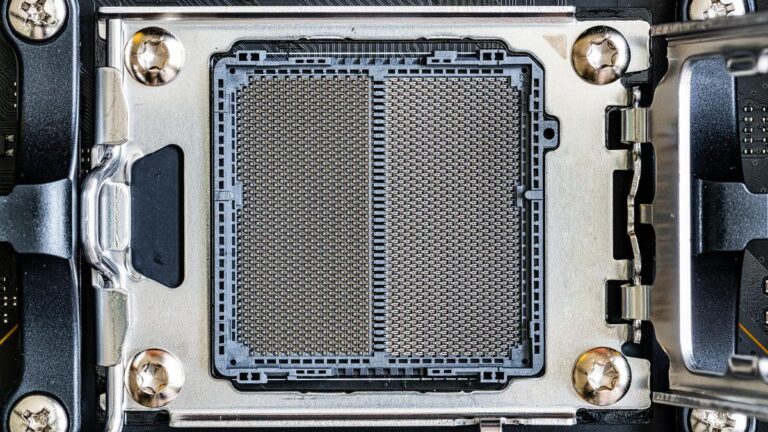

This case highlights a growing issue in the rideshare industry: the use of jailbroken phones to game the system. Many drivers allegedly paid the defendants $300 a month to have their iPhones, mostly older models, jailbroken in exchange for access to this illegal advantage.

The Mechanics of the Scheme

So, how did the scheme work? The jailbroken phones let drivers see information that the standard Uber driver app keeps hidden. For instance, when a driver received a ride request, the jailbroken phone displayed key details such as:

- Drop-off location

- How much the rider paid

- Surge pricing alerts

- ETA and route details

With these insights, drivers could selectively accept the most profitable rides and maximize their earnings. If a driver accepted a ride, the trip proceeded as normal. If they rejected it, there were no consequences: the rejection did not count against their standing in the Uber app. This let drivers turn down lower-paying rides while staying available for surge-priced ones.

Drivers using this scheme gained an unfair advantage over those who operated within Uber’s normal guidelines. Although Uber typically penalizes drivers for rejecting rides, the hacked system bypassed these rules, allowing drivers to cherry-pick high-profit rides without repercussions.

The Role of Jailbroken Phones

The jailbroken phones were at the heart of the rideshare app hacking scheme. Jailbreaking, a process that removes software restrictions imposed by Apple on iPhones, allowed the defendants to install unauthorized apps and modify the Uber app’s functionality. These modifications gave drivers access to information they otherwise wouldn’t have, including insights that were intended only for Uber’s internal system.

While the drivers using the jailbroken phones weren’t directly stealing money from riders, they were certainly profiting at the expense of other drivers. Some sources have pointed out that this practice enabled drivers to steal high-paying rides from drivers who weren’t using the modified app. Essentially, this scheme allowed them to manipulate Uber’s algorithms to gain a competitive edge.

A Wider Impact

According to reports, the defendants operated not just in New York but also in New Jersey, where additional drivers participated in the scheme. The widespread nature of this operation is alarming, as it exposes vulnerabilities in the rideshare industry that could potentially be exploited on a larger scale. The case demonstrates how drivers could game Uber’s system, putting honest drivers at a disadvantage.

Jailbreaking and Uber’s Response

Uber has been battling various forms of fraud on its platform for years. Jailbreaking phones and tampering with the Uber app violate the company's terms of service, but the scale of this particular operation is significant. Uber has yet to make a public statement on the specific charges in this case, but this kind of exploitation is clearly a growing concern for the rideshare giant.

It’s important to note that while this scheme did not directly defraud riders, the ripple effects are profound. Honest drivers lost out on profitable rides, and the system that Uber created to match riders with drivers in real time was effectively broken by the actions of a few.

If convicted, Paldiel and Palacios face severe penalties: up to 20 years in prison on each of the two conspiracy charges, wire fraud and money laundering. The court case will determine the extent of their involvement and the potential repercussions for the thousands of drivers who may have participated in this scheme.

This case serves as a reminder that while technology can enhance our lives, it also opens up new avenues for fraud. The rideshare app hacking scheme demonstrates the lengths some individuals are willing to go to exploit technological loopholes for personal gain.

What About the Location-Changing App?

A GPS location-changing app was also used in this scheme to manipulate a driver's reported location, making it appear as though they were in a surge pricing area. Drivers could either move their apparent location into the surge zone or, to keep Uber from becoming suspicious, stay put for roughly as long as the real drive back from the surge zone would take. However, the defendants discouraged frequent use of this feature to avoid detection.

Another use of this location manipulation app was by Black SUV drivers, especially at airports. Since these drivers often wait for long periods, they would change their location to appear as if they were already in line for rides, even while at home or on their way to airport parking. This gave them an unfair advantage, allowing them to skip ahead of other drivers who weren’t using the app.

In conclusion, the defendants weren't actually stealing money from riders, since Uber handles payment through its usual pricing system. Instead, the drivers using the app were scamming honest drivers. By manipulating their GPS location, they secured more profitable rides, leaving those who followed the rules at a disadvantage. The defendants made their profit by charging these drivers for access to the hacked apps. In the end, the real victims of this scheme were the honest drivers who lost out on fair opportunities.