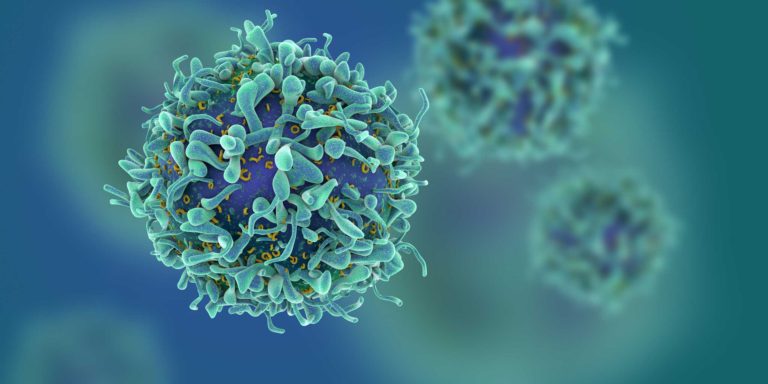

Recreating near-perfect replicas of the human T-cell is an astonishing feat that may bring us one step closer to more effective treatments for cancer and autoimmune diseases. It could also help us better understand how human immune cells behave. Eventually, these manufactured cells could be used to strengthen the immune systems of people with cancer or immune deficiencies.

Because human T-cells are extremely delicate, they are hard to use in research: they survive for only a few days once they’ve been extracted. “We were able to create a novel class of artificial T-cells that are capable of boosting a host’s immune system by actively interacting with immune cells through direct contact, activation, or releasing inflammatory or regulatory signals,” explains Mahdi Hasani-Sadrabadi, an assistant project scientist at UCLA Samueli. “We see this study’s findings as another tool to attack cancer cells and other carcinogens.”

When an infection takes hold in the body, T-cells become activated and travel through the bloodstream to the affected areas. To get where they need to go, T-cells have to squeeze through small pores and gaps. To do this, they sometimes deform to as little as a quarter of their normal size. They can also grow to around three or four times their normal size, which helps them fight off attacking antigens.

Only very recently have bioengineers been able to replicate human T-cells this closely. The team fabricated the artificial T-cells using a microfluidic system. By combining mineral oil with an alginate biopolymer and water, the researchers created alginate microparticles that replicate the structure and form of human T-cells. The microparticles were then collected from a calcium ion bath, and their elasticity was tuned by changing the calcium ion concentration in the bath.

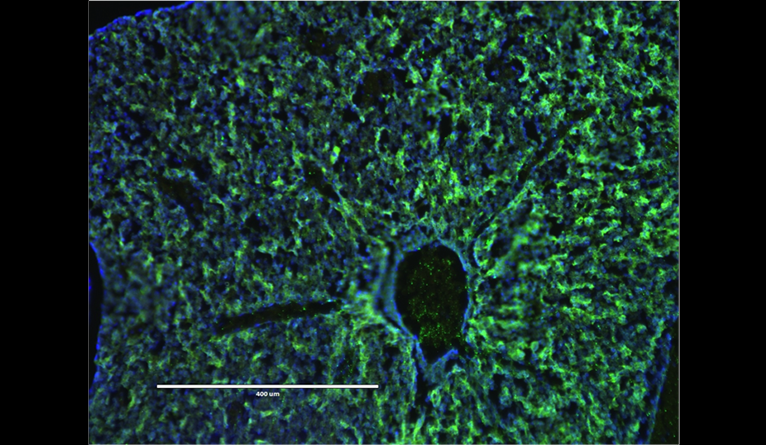

To give the synthetic T-cells the same infection-fighting, tissue-penetrating, and inflammation-regulating properties as natural T-cells, the researchers had to adjust the cells’ biological attributes. They did this by coating the synthetic T-cells with phospholipids. They then used a process called bioconjugation to link the cells with CD4 signalers, the particles responsible for activating natural T-cells to attack cancer cells or infections.
