Electron microscopy has allowed scientists to get a much closer look at the properties of individual atoms. The problem is that even at this resolution, things are still a little fuzzy. Like optical lenses, the lenses in electron microscopes have tiny imperfections known as aberrations, and to smooth out these defects scientists must use special aberration correctors.

However, there’s only so much aberration correctors can do, and to correct multiple aberrations you would need an endless collection of corrector elements. Thankfully, that’s where David Muller, the Samuel B. Eckert Professor of Engineering in the Department of Applied and Engineering Physics (AEP), Sol Gruner, the John L. Wetherill Professor of Physics, and Veit Elser, a professor of physics, come in.

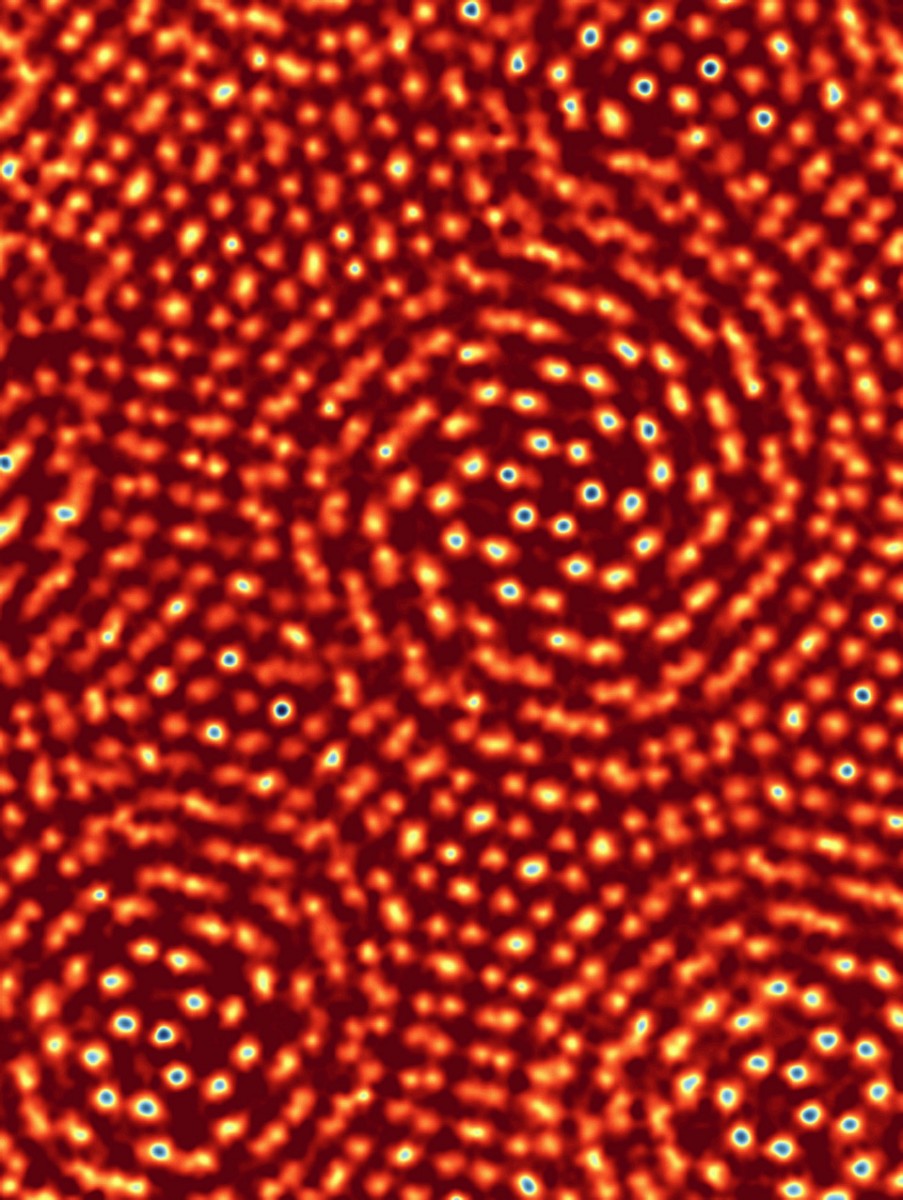

Together, the scientists have come up with a new method of achieving ultra-high resolution with their microscope without the need for any aberration correctors. Using an electron microscope pixel array detector (EMPAD) that was first introduced back in 2017, the team achieved a world record for image resolution by imaging one-atom-thick molybdenum disulfide (MoS2). Electron wavelengths are much smaller than those of visible light, so in principle electrons can resolve far finer detail. The problem is that electron microscope lenses are not very accurate.

Image resolution in electron microscopy has recently been improved by increasing both the energy of the electron beam and the numerical aperture of the lens. The end result: a well-lit subject. Previously, scientists set records for sub-angstrom resolution by using a very high beam energy and an aberration-corrected lens. Atomic bonds are typically between 1 and 2 angstroms in length, so sub-angstrom resolution lets scientists get a very clear picture of individual atoms.

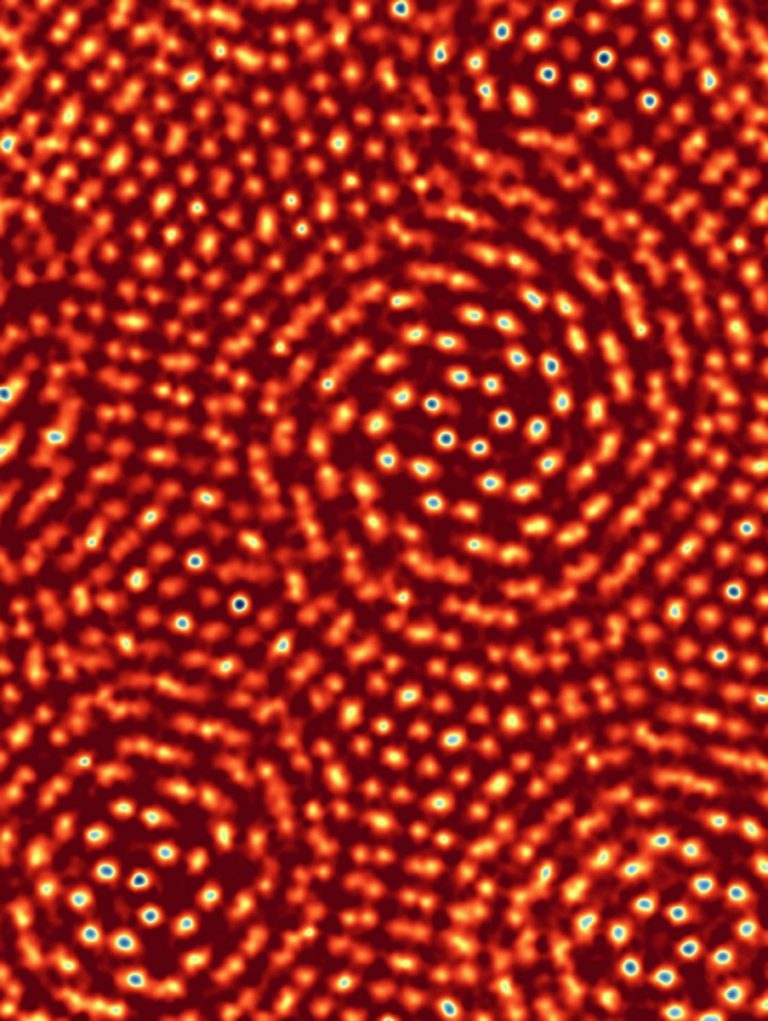

Eventually, the group achieved a resolution of 0.39 angstroms, setting a new world record, and doing so at a less damaging beam energy. The team used both ptychography and the EMPAD to achieve these results. With the beam energy set at just 80 keV, the microscope is able to pick up images with the greatest of clarity. With a resolution this fine, the team needed a new test subject for the EMPAD method.
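To put these numbers in perspective, the short Python sketch below estimates the wavelength of an electron at a given beam energy using the standard relativistic de Broglie relation. It is only an illustration and not part of the team's method; the function name `electron_wavelength_angstrom` is made up for this example, and the physical constants are the standard SI values.

```python
import math

# Physical constants in SI units.
PLANCK_H = 6.62607015e-34            # Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31     # electron rest mass, kg
ELEMENTARY_CHARGE = 1.602176634e-19  # elementary charge, C
SPEED_OF_LIGHT = 299792458.0         # speed of light, m/s


def electron_wavelength_angstrom(beam_energy_kev: float) -> float:
    """Relativistic de Broglie wavelength (in angstroms) of an electron
    with the given kinetic energy in keV. Illustrative helper only."""
    kinetic_j = beam_energy_kev * 1e3 * ELEMENTARY_CHARGE
    rest_j = ELECTRON_MASS * SPEED_OF_LIGHT ** 2
    # Relativistic momentum: p = sqrt(2 * m0 * E * (1 + E / (2 * m0 * c^2)))
    momentum = math.sqrt(
        2.0 * ELECTRON_MASS * kinetic_j * (1.0 + kinetic_j / (2.0 * rest_j))
    )
    return PLANCK_H / momentum * 1e10  # metres -> angstroms


if __name__ == "__main__":
    wavelength = electron_wavelength_angstrom(80.0)
    record = 0.39  # angstroms, the resolution reported in the article
    print(f"Electron wavelength at 80 keV: {wavelength:.4f} angstroms")
    print(f"Record resolution / wavelength: {record / wavelength:.1f}")
```

At 80 keV this gives a wavelength of roughly 0.042 angstroms, so even the record resolution of 0.39 angstroms is still several electron wavelengths wide. That gap illustrates why the imperfect lenses, rather than the wavelength itself, are the limiting factor.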

Yimo Han and Pratiti Deb stacked two sheets of MoS2 on top of one another, with one sheet slightly askew so that atoms from the two sheets appeared at a range of distances from one another. “It’s essentially the world’s smallest ruler,” says Gruner. The EMPAD is capable of recording a wide range of intensities and has now been fitted to various microscopes across campus.


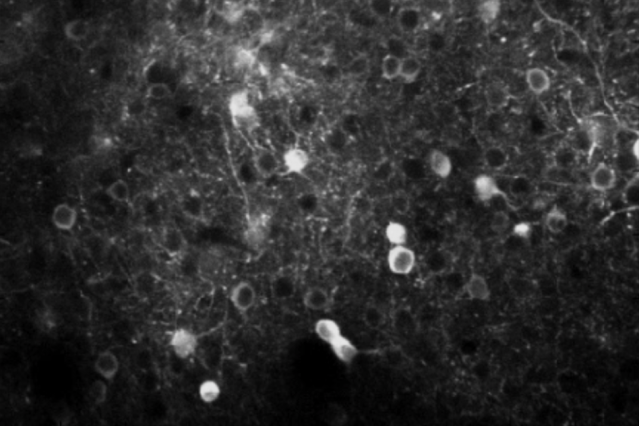
