Researchers from the Perelman School of Medicine at the University of Pennsylvania have completed an analysis suggesting that proton therapy can substantially reduce treatment side effects. In the study, cancer patients who received proton therapy had a significantly lower risk of severe side effects than those treated with traditional radiation. Overall cure rates were similar between the two groups, but patients undergoing proton therapy had, on average, fewer unplanned hospitalizations. Overall, the researchers found that proton therapy reduced the risk of these side effects by around two thirds.

There are a few key differences between proton therapy and traditional radiation. The main one is that traditional photon radiation uses several x-ray beams to irradiate the tumor. Because x-rays pass all the way through the body, radiation is unavoidably deposited in the healthy tissue surrounding the tumor, increasing the risk of further damage. Proton therapy works differently: it directs a beam of positively charged protons at the tumor. Protons deposit most of their energy at a depth that depends on their energy and then stop, so the radiation can be concentrated on the target with very little delivered to tissue beyond it.

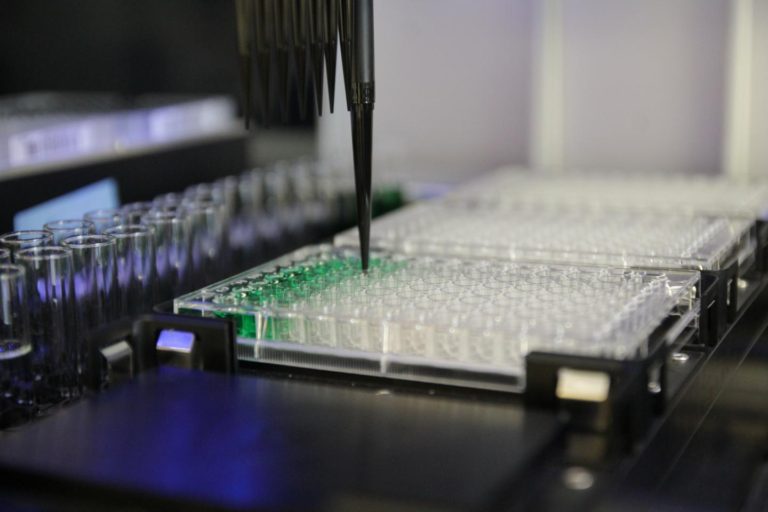

As part of the study, researchers examined side effects including pain, nausea, diarrhea, and difficulty breathing or swallowing. However, they focused only on side effects classed as grade three or higher, meaning those severe enough to require hospitalization. Of the 1,483 cancer patients receiving both radiation and chemotherapy, 391 were given proton therapy and the remaining 1,092 received photon radiation.

The study's main goal was to determine whether patients experienced an adverse side effect of grade three or higher within 90 days of receiving treatment. The results showed that 27.6 percent of patients receiving traditional photon treatment did, compared with just 11.5 percent of those receiving proton therapy.

“We know from our clinical experience that proton therapy can have this benefit, but even we did not expect the effect to be this sizeable,” commented James Metz, MD, leader of the Roberts Proton Therapy Center at Penn, chair of Radiation Oncology, and senior author of the study. Importantly, the reduction in side effects did not come at the cost of reduced effectiveness, so proton therapy could potentially improve outcomes for patients.

Proton therapy is FDA-approved and is showing great promise as an effective and increasingly widely used cancer treatment.