Researchers at MIT and McMaster University have made a significant breakthrough in the fight against drug-resistant infections. Using an artificial intelligence algorithm, they have identified a novel antibiotic with the potential to kill Acinetobacter baumannii, a bacterium responsible for numerous drug-resistant infections.

Acinetobacter baumannii is commonly found in hospitals and is known to cause serious infections such as pneumonia, meningitis, and wound infections. It is particularly prevalent among wounded soldiers in conflict zones. The microbe’s ability to survive on surfaces and acquire antibiotic-resistance genes from its environment has made it increasingly challenging to treat.

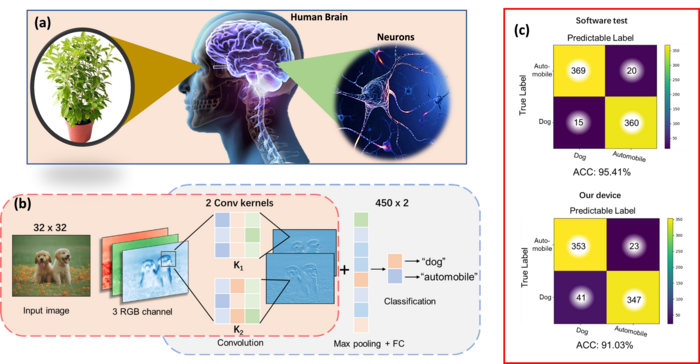

The research team, led by Jonathan Stokes, a former MIT postdoc who is now an assistant professor at McMaster University, used a machine-learning model to sift through a library of approximately 7,000 drug compounds. The model was trained to identify chemical compounds that could inhibit the growth of A. baumannii.

The results show how AI can accelerate the search for novel antibiotics. James Collins, the Termeer Professor of Medical Engineering and Science at MIT, expressed his excitement about using AI to combat pathogens like A. baumannii.

To gather training data for their computational model, the researchers exposed A. baumannii to thousands of chemical compounds and recorded which ones inhibited its growth. Given the structure of each molecule and whether it inhibited growth, the algorithm learned to recognize chemical features associated with growth inhibition.
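To make the training idea concrete, here is a minimal sketch that learns which structural features go with growth inhibition. It is not the researchers' model: it swaps in a plain logistic regression, and the feature vectors, labels, and function names are all hypothetical toy values invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(features, labels, lr=0.5, epochs=500):
    """Fit weights by plain gradient descent on the log loss."""
    n = len(features)
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def predict(weights, bias, x):
    """Predicted probability that a compound inhibits growth."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical toy data: each row marks which of four substructures a
# molecule contains; each label says whether it inhibited growth (1) or not (0).
TOY_FEATURES = [
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 1, 0, 1],
    [0, 0, 1, 1],
    [0, 1, 1, 0],
]
TOY_LABELS = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic(TOY_FEATURES, TOY_LABELS)
```

In this toy set the first substructure appears only in the inhibiting molecules, so the fitted model gives it a positive weight and scores unseen molecules containing it above 0.5.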

Once trained, the model analyzed a set of 6,680 compounds from the Drug Repurposing Hub at the Broad Institute. In less than two hours, the algorithm identified several hundred promising compounds. The researchers then selected 240 compounds for experimental testing in the lab, focusing on those with unique structures compared to existing antibiotics.
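The selection step described above, ranking compounds by predicted activity and then favoring structures unlike existing antibiotics, might look roughly like the sketch below. The article does not say how structural uniqueness was measured; Tanimoto similarity over sets of structural features is a common choice and is assumed here. The compound names, scores, fingerprints, and both thresholds are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of feature IDs."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def select_novel_hits(scores, fingerprints, known,
                      score_cutoff=0.5, max_similarity=0.4):
    """Keep high-scoring compounds that are dissimilar to every known antibiotic."""
    hits = []
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    for name, score in ranked:
        if score < score_cutoff:
            break  # everything after this point scores even lower
        nearest = max((tanimoto(fingerprints[name], k) for k in known),
                      default=0.0)
        if nearest <= max_similarity:
            hits.append(name)
    return hits

# Hypothetical model scores and structural fingerprints (sets of feature IDs).
scores = {"cmpd_a": 0.92, "cmpd_b": 0.81, "cmpd_c": 0.30, "cmpd_d": 0.67}
fingerprints = {
    "cmpd_a": {1, 2, 3, 4},
    "cmpd_b": {7, 8, 9},
    "cmpd_c": {10, 11},
    "cmpd_d": {5, 6, 12},
}
known_antibiotics = [{1, 2, 3, 5}, {20, 21}]

hits = select_novel_hits(scores, fingerprints, known_antibiotics)
print(hits)  # ['cmpd_b', 'cmpd_d']
```

Here `cmpd_a` scores highest but is dropped because it shares most of its features with a known antibiotic, while `cmpd_c` is dropped for its low predicted activity.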

These tests yielded nine antibiotics, one of which was exceptionally potent. Originally explored as a potential diabetes drug, this compound, which the researchers named abaucin, was highly effective against A. baumannii while sparing other bacterial species. This “narrow spectrum” activity lowers the risk of bacteria rapidly developing resistance to the drug and minimizes harm to beneficial gut bacteria.

Further studies showed that abaucin can treat A. baumannii wound infections in mice and is effective against drug-resistant strains isolated from human patients. The researchers also identified the drug’s mechanism of action: it interferes with lipoprotein trafficking, a process that cells use to transport proteins.

Although all Gram-negative bacteria express the protein targeted by abaucin, the researchers found the antibiotic to be highly selective for A. baumannii. They speculate that subtle differences in how A. baumannii carries out lipoprotein trafficking account for the drug’s specificity.

The team aims to optimize the compound’s medicinal properties in collaboration with McMaster researchers, with the ultimate goal of developing it for patient use. They also plan to use their modeling approach to identify potential antibiotics for other drug-resistant infections, including those caused by Staphylococcus aureus and Pseudomonas aeruginosa.

The research was made possible through the support of various funding sources and organizations, highlighting the collaborative efforts in the pursuit of innovative solutions against antibiotic resistance.