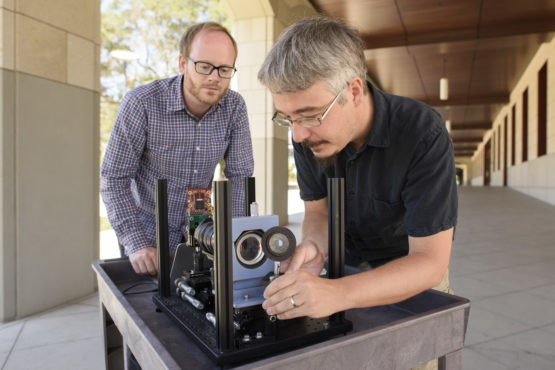

Presented at CVPR this week, the camera designed by Gordon Wetzstein, Donald Dansereau and colleagues at Stanford University, working with the University of California, San Diego, is the first single-lens, wide-field-of-view light field camera. It is intended to improve the vision of robots.

The cameras robots use today are limited. They capture strictly two-dimensional images, so a robot has to view its environment from multiple perspectives before it can understand the objects, materials and movements around it. That is far from ideal for driverless cars or drones. The newly designed camera can obtain the same information from a single 4D image.

Dansereau likens the difference between the old technology and the new to that between a peephole and a window. “A 2D photo is like a peephole because you can’t move your head around to gain more information about depth, translucency or light scattering. Looking through a window, you can move and, as a result, identify features like shape, transparency, and shininess,” he said.

The camera builds on research into light field photography carried out 20 years ago by Stanford professors Pat Hanrahan and Marc Levoy. Where a typical 2D camera records an image focused at a single depth, a light field camera captures a 4D image that also records the direction and distance of the light reaching the lens. With this additional information, users can refocus the picture anywhere in the camera’s field of view, up to 140 degrees with the new camera, after it has been taken.
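For readers curious how focusing after capture can work, here is a minimal sketch of shift-and-add refocusing, a textbook light field technique. It is not the researchers' own processing pipeline. It assumes a simplified 2D slice of the light field (one viewpoint axis, one pixel axis) instead of the full 4D data, and the function names `refocus` and `synthetic_point` are hypothetical, chosen only for this illustration.

```python
def refocus(light_field, slope):
    """Shift-and-add refocusing of a 2D light field slice.

    light_field[u][x] is the intensity seen from viewpoint u at pixel x.
    slope is the shift in pixels per unit of viewpoint offset; it selects
    which depth ends up in focus.
    """
    n_views = len(light_field)
    width = len(light_field[0])
    centre = (n_views - 1) / 2
    image = [0.0] * width
    for u, view in enumerate(light_field):
        shift = round(slope * (u - centre))
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                # Average the shifted views; aligned features add up.
                image[x] += view[src] / n_views
    return image


def synthetic_point(n_views, width, position, disparity):
    """Hypothetical test scene: one bright point that appears to move
    `disparity` pixels between neighbouring viewpoints."""
    centre = (n_views - 1) / 2
    field = [[0.0] * width for _ in range(n_views)]
    for u in range(n_views):
        field[u][position + round(disparity * (u - centre))] = 1.0
    return field


field = synthetic_point(n_views=5, width=21, position=10, disparity=2)
sharp = refocus(field, slope=2)    # focal depth matches the point
blurred = refocus(field, slope=0)  # focal depth elsewhere
print(max(sharp), max(blurred))
```

When the slope matches the point's apparent motion between viewpoints, all five views line up and the point is rendered at full brightness. With any other slope its light is spread across several pixels, which is the blur of an out-of-focus object.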

Dansereau and Wetzstein hope that robots equipped with their new camera will be able to navigate through rain and other vision obstacles. “We want to consider what would be the right camera for a robot that drives or delivers packages by air,” said Dansereau.

Related Links:

- WIDE-FOV MONOCENTRIC LIGHT FIELD CAMERA | CVPR 2017 For more info.

- A Wide-Field-of-View Monocentric Light Field Camera

- A new camera designed by Stanford researchers could improve robot vision and virtual reality Article source.
