The human brain is smaller than most people imagine: it can be held in one hand and weighs about 3 pounds. Yet this innocuous-looking gray matter is enormously complex and still barely understood. Part of the challenge, despite the many researchers, scientists, and doctors dedicated to its study, is that brain research has no central hub for information, mapping, or data gathering. Soon, though, the Human Brain Project (HBP) hopes to change all that.

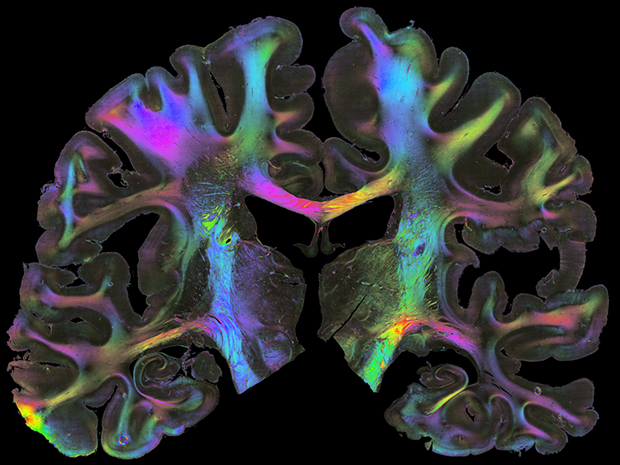

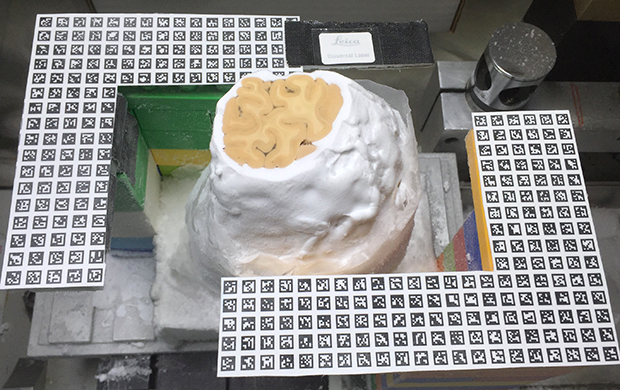

“The brain is too complex to sit in your office and solve it alone,” says Katrin Amunts, a neuroscientist who co-leads the 3D-PLI project at Jülich. 3D-PLI, short for three-dimensional polarized light imaging, is just one of hundreds of brain-related studies under way around the world. The project images slices of brain only 60 micrometers thick to track the orientation of nerve fibers. The images are so detailed that each slice generates 40 gigabytes of data, and once the full 3D digital reconstruction is complete, a single brain will take up a few petabytes of storage.

By combining these scans with the results of studies and experiments around the world, neuroscientists expect fundamental advances in the field and answers to important questions. Researchers could search and browse the human brain, examining nerve fibers in detail, seeing how everything relates at the cellular level, and linking each region to the latest research on it.

“We don’t have the faintest idea of the molecular basis for diseases like Alzheimer’s or schizophrenia or others. That’s why there are no cures,” says Paolo Carloni, director of the Institute for Computational Biomedicine at Jülich. “To make a big difference, we have to dissect [the brain] into little pieces and build it up again.”

3D Reconstruction: Data from the polarized light imaging of the brain is pieced together by a computer to produce a 3D image of the neuronal fiber tracts (shown here as tubes).

To build a central resource, scientists need not only funding but also computing hardware and software, and they need most labs and scientists to work together and share freely. The future of neuroscience requires researchers to make the leap from individual projects and labs to a worldwide association. But the road to such a consortium is not an easy one, as the Human Brain Project has already discovered.

Funded by the European Commission in 2013 with a badly needed €1 billion, the Human Brain Project set out to provide the infrastructure for such an organization, from data analytics and modeling software to powerful computers and simulations.

The HBP's goal resembles that of the Human Genome Project (HGP), an international endeavor that produced a complete, searchable model of the human genome. The HGP pushed genetic research a great leap forward and became a model for sequencing genes in numerous species. In the same way, the Human Brain Project will employ informatics, as pioneered by the HGP, to create a searchable database of the brain so that researchers from California to Italy can work from the same deeply detailed data.

Originally conceived by neuroscientist Henry Markram, who presented his vision in a 2009 TED Talk, the Human Brain Project has weathered considerable controversy to get where it is today. Markram wanted to use a supercomputer to simulate the entire human brain, all hundreds of trillions of its synapses, within 10 years. Once the funding came in, however, many scientists criticized the endeavor; some labs refused to join, and others withdrew later on.

In July 2014, 800 researchers signed an open letter to the European Commission calling for an independent review of “both the science and the management of the HBP” and threatening to boycott the project.

After a makeover and a 53-page report criticizing its management and science, the organization refocused on realistic goals, aiming to “concentrate on enabling methods and technologies.” In the two years since, the Human Brain Project has moved away from simulation and is instead aiming for an immensely detailed map of the brain. “After having trouble at the beginning, we are now on a good road,” said Amunts, scientific research director of the HBP.

“It’s a big relief,” says Henry Kennedy, research director at the Stem-Cell and Brain Research Institute and a signer of the open letter. “They’ve done what they said they would do in the mediation process.”

Markram, no longer an executive of the project, is less enthusiastic about the changes and the new direction. “I dedicated three years to win the HBP grant, bringing hundreds of scientists and engineers around the focused mission of simulating the brain,” he said. He still believes in his original vision but also sees merit in the current HBP. “Building many different models, theories, tools, and collecting all kinds of data and a broad range of experiments serves a community vision of neuroscience very well,” he added.

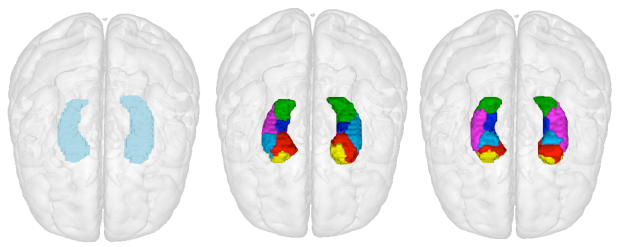

Age and Memory: The hippocampus plays an important role in memory and is one of the brain regions most affected by senile dementia. New imaging methods show that this region’s internal structure differs between young adults [middle] and older adults [right].

“Coming up with something premature, selling it big, and having people go to the website and being disappointed is the very last thing we can do now,” Eickhoff says. “It’s on us now to deliver.”

Under pressure to prove its viability, the HBP must navigate a maze of advisory boards, platforms, and subprojects across Europe. It is not the first large-scale neuroscience project, either. China, Israel, and Japan each have their own neuroscience research projects. In the United States there is the Allen Institute for Brain Science, founded in 2003, as well as the Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative, launched in 2013, the same year as the HBP.

What sets the HBP apart is its emphasis on informatics. At its core will be high-powered analytics and computation, provided by teams dedicated to building software for sharing data on the brain.

The Human Genome Project has helped cancer researchers combine and analyze the genomes of large groups of patients to find abnormalities in tumors. In the same way, neuroscientists hope the Human Brain Project will one day let them compare brain scans sourced from many labs to detect similarities in uncommon neurological disorders.

Once the infrastructure is fully operational, researchers will be able to coordinate and collaborate online, with easy access to tools and to data such as human and rodent brain atlases. Instead of pulling scattered information from various journals, researchers using a neuroinformatics platform could search data systematically and view several types of information at once, for example cell-density data alongside anatomical images of the visual cortex.

Researchers are already seeing interesting results from curating and combining various imaging data as Eickhoff’s team assembles the HBP brain atlases. While compiling brain scans taken during behavioral experiments, the team noticed that the dorsal premotor cortex, previously believed to host a grab bag of functions ranging from spatial attention to motor preparation, instead consists of distinct parts, each with its own specific function.

The problem is not a shortage of data but the incompatibility of different data sets. “The data is there, but it’s up to us to curate it,” says Timo Dickscheid, leader of the big-data analytics team at the Institute of Neuroscience and Medicine at Jülich.

His team is building a so-called “Google Brain” in which users can zoom into any part of the brain, from its folds down to neuron paths, and back out again. Part of what makes the job so challenging is that sources differ in size and imaging method, and all of them must be anchored to a common scale. For example, the system must handle MRI data of the almond-sized amygdala while also letting users focus down to micrometer-scale images of neurons from two-photon microscopy.

Another challenge is the inconsistent methods of classification and naming used around the world. Take the hippocampus, a seahorse-shaped region of the brain involved in memory. Scientists still do not agree on exactly where it starts and stops, and there are almost 20 different names for its various parts. A single authoritative voice needs to reconcile this information before the HBP’s brain atlas can be completed.

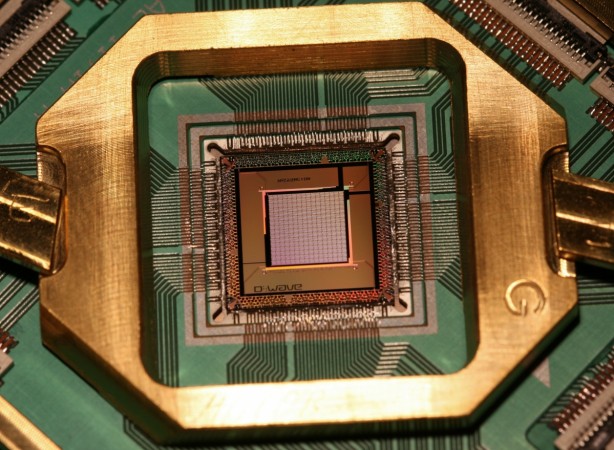

At the same time, the HBP has not given up on simulation altogether. To capture every level of the brain, Carloni heads a group working on molecular simulations of the protein interactions that occur as memories form. Such a task requires quantum mechanics and supercomputing technology that was previously unavailable, says Carloni.

Another simulation effort aims to improve NEST, the Neural Simulation Tool, which Markus Diesmann and Marc-Oliver Gewaltig created in 1994 for brain-scale mathematical models. Using NEST in 2013, Diesmann and colleagues modeled one second of activity in a network 1 percent the size of the brain. The simulation of 1.73 billion nerve cells connected by 10.4 trillion synapses used 82,944 processors on Japan’s K supercomputer.

“It showed us that this is doable,” says Diesmann, “but it also showed us the limitations.” Besides covering only a small fraction of the human brain, the simulation took the supercomputer 40 minutes to reproduce 1 second of biological time, far too short a span to capture brain events like memory and learning. With the computing power provided by the Human Brain Project, however, Diesmann can work on faster algorithms and expects an improved NEST within two years.
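To put the 40-minute figure in perspective, here is a back-of-envelope sketch in Python using only the two numbers quoted above (1 second of biological time, 40 minutes of computing). The one-hour extrapolation is a hypothetical illustration that assumes runtime grows linearly with simulated time on the same network; it is not a figure from the researchers.

```python
# Figures quoted in the article for the NEST run on the K supercomputer.
BIOLOGICAL_SECONDS = 1.0   # simulated biological time
WALL_CLOCK_MINUTES = 40.0  # computing time the supercomputer needed


def slowdown_factor(bio_seconds: float, wall_minutes: float) -> float:
    """Wall-clock seconds spent per second of simulated biological time."""
    return (wall_minutes * 60.0) / bio_seconds


def naive_wall_clock_days(bio_seconds: float, factor: float) -> float:
    """Hypothetical: assumes runtime scales linearly with simulated time."""
    return bio_seconds * factor / 86_400.0  # 86,400 seconds per day


factor = slowdown_factor(BIOLOGICAL_SECONDS, WALL_CLOCK_MINUTES)
print(f"Slowdown: {factor:.0f}x slower than real time")
print(f"One biological hour: about {naive_wall_clock_days(3600, factor):.0f} days")
```

Run as written, it reports a slowdown of 2400 times and, under the linear assumption, roughly 100 days of computing for a single simulated hour, which is why processes like learning remained out of reach.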

“The plan in the HBP is to have people working on the infrastructure, but they are surrounded by neuroscientists to keep the infrastructure construction on track,” says Diesmann. Feedback from neuroscientists is essential, because the tools stay up to date and useful only if researchers actually use them. In an earlier large-scale effort, Great Britain’s CARMEN (Code Analysis Repository and Modeling for E-Neuroscience) portal, few scientists needed to share or store electrophysiology data sets, so very few used it. The information quickly went out of date, and the platform, launched in 2008, is no longer in use today.

At the same time, HBP has commercial goals: to use knowledge gained about the human brain to improve computer networks and robotics. “In the end, we want to develop new products out of this,” says Alois C. Knoll, chair of robotics and embedded systems at the Technical University of Munich.

To do this, the Human Brain Project is building on two earlier brain-inspired computing systems, BrainScaleS and SpiNNaker, both of which try to mimic the brain’s power and speed as well as its low energy consumption.

With Knoll leading the neurorobotics team, the group is developing a cloud-based simulation environment that lets scientists operate virtual robots with nervous systems modeled on the brain. It would be extremely complex for every lab to have a computer that can do this onsite, but with an online simulation interface, scientists can choose a body, an environment, and a task, connect them to a brain, and then experiment away.

“Essentially, we are virtualizing robotics research,” says Knoll, who hopes to advance robotics by “orders of magnitude.”

Early versions of six HBP tools have been publicly available since March 2016. Many scientists can’t wait to use them: “Our work will sit quite happily on one of their platforms,” says Kennedy, who is not currently part of the project. Others still have reservations.

Large international neuroscience collaborations have fallen through several times before. Alexandre Pouget, a computational neuroscientist at the University of Geneva who signed the open letter criticizing the HBP and is not currently part of the project, says, “Trying to do it across all of the neuroscience might be too ambitious, but we shall see.”

At least one thing the project doesn’t have to worry about is computing power, according to Boris Orth, head of the High-Performance Computing in Neuroscience division of the Jülich Supercomputing Center. JUQUEEN is one of the supercomputers the HBP currently uses for research, and Jülich commissioned JURON and JULIA as pilot supercomputers with added memory to help neuroscientists run simulations.

If successful, these systems could one day fulfill Markram’s original vision for the Human Brain Project: a biologically realistic virtual brain model in silico. For now, though, Orth’s team simply hopes neuroscientists will use the supercomputers. “It’s a challenge sometimes to understand each other when we discuss requirements,” Orth added. “People think something should be straightforward to do, when in fact it’s not.”

Whatever challenges lie ahead, the Human Brain Project is scheduled to end in 2023. “We don’t think brain research will be over when the Human Brain Project comes to an end,” says Knoll. He, Amunts, and colleagues want the project to continue after its ten-year run as an independent entity that raises its own funds, and they have already submitted applications to make that happen.

If approved, and with the right backing, the Human Brain Project might one day become a physical center for the advancement of neuroscience, a CERN for the brain. Then even more impressive discoveries might be made about the brain, its diseases, and ourselves.
